Load Balancing

Overview

Two of the most critical requirements for any online service provider are availability and redundancy. A server’s response time depends on how much spare capacity it has when a request arrives. If even a single component fails or is overwhelmed by requests, responses slow down or fail outright, and both the customer and the business suffer.

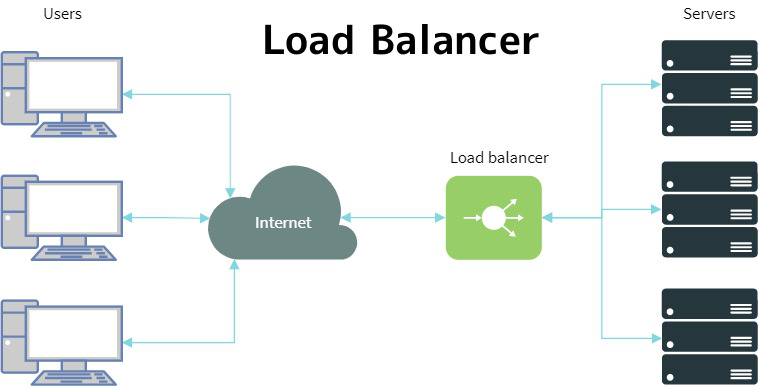

Load balancing attempts to resolve this issue by sharing the workload across multiple components. An incoming request can be routed away from an overtaxed server to one that has more resources available. Load balancing has a variety of applications, from network switches to database servers.

How Load Balancing Works

Service providers typically build their networks with Internet-facing frontend servers that shuttle information to and from backend servers. These frontend servers run load balancing software, which forwards each request to one of the backend servers based on resource availability. The software applies internal rules and logic to determine when and where to forward each request.
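To make "based on resource availability" concrete, here is a minimal sketch of one possible rule: pick the backend with the most free capacity. The `Backend` record, its `capacity` and `active` fields, and the `pick_most_available` function are hypothetical names chosen for illustration, and counting in-flight requests is only one assumed way of measuring availability. Real load balancing software may weigh other signals.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    """Hypothetical record of one backend server's load."""
    name: str
    capacity: int    # assumed metric: maximum concurrent requests
    active: int = 0  # requests currently in flight


def pick_most_available(backends):
    """Return the backend with the most free capacity, or None if all are full."""
    candidates = [b for b in backends if b.active < b.capacity]
    if not candidates:
        return None
    return max(candidates, key=lambda b: b.capacity - b.active)


backends = [
    Backend("backend-a", capacity=10, active=9),
    Backend("backend-b", capacity=10, active=3),
    Backend("backend-c", capacity=5, active=5),
]

chosen = pick_most_available(backends)
print(chosen.name)  # backend-b (7 free slots, versus 1 and 0)
```

A rule like this favors the server with the most headroom, at the cost of needing up-to-date load figures from every backend.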

Here’s a rundown of how load balancing works:

1- A user opens a web page such as Google.com.

2- A frontend server receives the request and determines where to forward it. Various algorithms can be used to determine where to forward a request, with some of the more basic algorithms including random choice or round robin. If there are no available backend servers, then the frontend server performs a predetermined action such as returning an error message to the user.

3- The backend server processes the request and generates a response. Meanwhile, the backend server periodically reports its current state to the load balancer.

4- The backend server returns a response to the frontend server, which then forwards it to the user.

If all goes well, the user receives a timely response regardless of which individual servers in the service provider’s network are busy or down. As long as at least one frontend server and at least one backend server are available, the user’s request can be handled properly.
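The steps above can be sketched as a small round robin balancer. This is an illustrative sketch, not how any particular product works: the `RoundRobinBalancer` class, its method names, the backend names, and the "503 Service Unavailable" string used as the predetermined error action are all assumptions made for the example.

```python
import itertools


class RoundRobinBalancer:
    """Hypothetical frontend that rotates requests across healthy backends."""

    def __init__(self, backend_names):
        self._names = list(backend_names)
        # Assume every backend is healthy until it reports otherwise.
        self._healthy = {name: True for name in self._names}
        self._cycle = itertools.cycle(self._names)

    def report_state(self, name, healthy):
        """Step 3: a backend periodically reports its current state."""
        self._healthy[name] = healthy

    def choose(self):
        """Step 2: take the next healthy backend in rotation, or None."""
        for _ in range(len(self._names)):
            name = next(self._cycle)
            if self._healthy[name]:
                return name
        return None

    def handle(self, request):
        """Steps 2 to 4: forward the request, or fall back to an error."""
        backend = self.choose()
        if backend is None:
            return "503 Service Unavailable"  # assumed predetermined action
        return f"{backend} handled {request}"


lb = RoundRobinBalancer(["backend-1", "backend-2", "backend-3"])
lb.report_state("backend-2", False)  # backend-2 reports that it is down

results = [lb.handle("GET /") for _ in range(4)]
print(results)  # alternates between backend-1 and backend-3

for name in ("backend-1", "backend-3"):
    lb.report_state(name, False)
print(lb.handle("GET /"))  # 503 Service Unavailable
```

Round robin ignores how busy each backend is, which keeps it simple. Skipping unhealthy servers is what lets the user still get a response while at least one backend remains available.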

Benefits of Load Balancing

1- Load balancing makes it easier for system administrators to handle incoming requests while decreasing wait time for users.

2- Users experience faster, uninterrupted service. Users won’t have to wait for a single struggling server to finish its previous tasks. Instead, their requests are immediately passed on to a more readily available resource.

3- Service providers experience less downtime and greater throughput. Even a full server failure need not affect the end user experience, because the load balancer routes new requests around the failed server to a healthy one.

4- System administrators experience fewer failed or stressed components. Instead of a single device performing a lot of work, load balancing has several devices perform a little bit of work.